
Projects J.A.M. van Gisbergen

1. Role of top-down signals in visual motion detection
2. Dynamic perceptual updating by vestibular signals
3. Central processing of vestibular signals for spatial orientation
4. Orientation constancy in spatial perception
5. Interactions between control signals of visual and vestibular origin in the generation of rapid eye movements
6. Neural mechanisms for the control of binocular eye movements in direction and depth
7. Gaze control by eye-head coordination

1. Role of top-down signals in visual motion detection

Research group: Maaike de Vrijer, Pieter Medendorp and Jan van Gisbergen

Introduction

Detecting true object motion during self-motion, although seemingly effortless, requires overcoming two nontrivial problems. First, the local motion information derived from an early, fine-grained analysis (the motion seen through many ‘peepholes’) must be integrated into a global motion pattern. Second, object motion must be distinguished from the effects of self-motion. So far, these problems have been treated as separable and amenable to sequential analysis. Here, we propose that it would be better to solve them jointly. To evaluate this idea, we propose novel experiments exploring the conditions under which true object motion is detected during self-motion.
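The two problems named above can be made concrete with a small sketch. It is an illustration under stated assumptions, not the group's model: each ‘peephole’ is assumed to report only the motion component normal to a local edge (the classic aperture problem), the local measurements are combined by a least-squares intersection of constraints (a standard technique swapped in here for illustration), and the retinal motion expected from self-motion, assumed known and uniform, is then subtracted. The function names and numbers are hypothetical. Note that the sketch follows the conventional sequential scheme (integrate first, then subtract self-motion) that the project proposes to improve on.

```python
import math

def global_velocity(normals, speeds):
    """Least-squares intersection of constraints.

    Each aperture i constrains the global velocity v by n_i . v = s_i, where
    n_i is the unit edge normal and s_i the measured normal speed.
    Solves the 2x2 normal equations (sum n n^T) v = sum n s.
    """
    a11 = sum(nx * nx for nx, _ in normals)
    a12 = sum(nx * ny for nx, ny in normals)
    a22 = sum(ny * ny for _, ny in normals)
    b1 = sum(nx * s for (nx, _), s in zip(normals, speeds))
    b2 = sum(ny * s for (_, ny), s in zip(normals, speeds))
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("apertures too similar: velocity is underdetermined")
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)

def object_motion(retinal_velocity, self_motion_flow):
    """Object motion = retinal motion minus the flow expected from self-motion (assumed known)."""
    return (retinal_velocity[0] - self_motion_flow[0],
            retinal_velocity[1] - self_motion_flow[1])

# Hypothetical example: retinal velocity (3, 1) seen through three apertures.
true_v = (3.0, 1.0)
angles = [0.0, math.pi / 3, 2 * math.pi / 3]
normals = [(math.cos(a), math.sin(a)) for a in angles]
speeds = [nx * true_v[0] + ny * true_v[1] for nx, ny in normals]

v = global_velocity(normals, speeds)
print(v)                                 # approximately (3.0, 1.0)
print(object_motion(v, (2.0, 1.0)))      # approximately (1.0, 0.0)
```

A single aperture cannot recover the global velocity, which is why at least two differently oriented edges are required before the least-squares system becomes solvable.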

Report

The project has just started.

2. Dynamic perceptual updating by vestibular signals

Research group: Rens Vingerhoets, Pieter Medendorp and Jan van Gisbergen

Introduction

Major issues in the study of spatial orientation concern how the brain detects self-motion and how this information is used to maintain a stable percept of external space. In this project, perceptual stability will be tested on a moment-to-moment basis while the subject is continuously rotated about a tilted (off-vertical) axis. In the absence of visual cues, the brain depends strongly on vestibular information to reconstruct head rotation and head position in space. The semicircular canals signal head rotation, but they only detect angular acceleration, so their signal dies out as rotation continues at constant speed. The otoliths detect linear acceleration forces, but they cannot distinguish between the pull of gravity and the effect of translation. Interpreting their ambiguous signal requires an internal model in the brain that integrates the available rotation signals. When these body-rotation signals are reliable, as during rotation in the light, percepts of body movement are roughly veridical. However, during fast off-vertical rotation in the dark (i.e., about a tilted axis), subjects have illusory translation and tilt percepts that do not correspond to the actual pure rotation stimulus. This combination of illusory effects has been interpreted as incorrect disambiguation of the otolith signal in conditions where rotation information from the canals is misleading. A rigorous test of this canal-otolith interaction hypothesis requires quantitative perceptual data at high temporal resolution. The project is designed to provide such data for the first time. The major objective in collecting these data is to test to what extent current dynamic orientation models, which have been developed and tested mainly on oculomotor data, also apply to perception.
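The two sensor limitations described above can be illustrated with a minimal sketch. The assumptions are deliberately simple and are not the models tested in this project: the canal is treated as a first-order high-pass filter on angular velocity with a hypothetical time constant `TAU_CANAL`, and the otolith is treated as sensing only the interaural shear component of gravito-inertial acceleration, with an assumed sign convention.

```python
import math

G = 9.81          # gravitational acceleration (m/s^2)
TAU_CANAL = 5.0   # hypothetical canal time constant (s); an assumption, not a measured value

def canal_signal(omega, t, tau=TAU_CANAL):
    """Canal response to a step to constant angular velocity omega (deg/s) at t = 0.

    First-order high-pass behavior: the response jumps to omega and then decays
    exponentially, so a constant rotation is eventually no longer signalled.
    """
    return omega * math.exp(-t / tau)

def otolith_shear(tilt_deg, lateral_acc):
    """Interaural shear sensed by the otoliths (m/s^2).

    Assumed convention: roll tilt contributes G*sin(tilt) and a lateral linear
    acceleration contributes -lateral_acc. Only the sum is available to the brain.
    """
    return G * math.sin(math.radians(tilt_deg)) - lateral_acc

# After several time constants of constant-velocity rotation, little canal signal remains.
print(canal_signal(60.0, 0.0), canal_signal(60.0, 25.0))

# A 10-deg tilt and a suitably chosen translation produce the same otolith output.
tilt_only = otolith_shear(10.0, 0.0)
translation_only = otolith_shear(0.0, -G * math.sin(math.radians(10.0)))
print(math.isclose(tilt_only, translation_only))  # True
```

Because the last two readings are identical, the otolith output alone cannot tell tilt from translation; some additional signal, such as rotation information from the canals, is needed to separate them.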

Report

Human spatial orientation relies on vision, somatosensory cues, and signals from the semicircular canals and the otoliths. The canals measure rotation, while the otoliths are linear accelerometers, sensitive to both tilt and translation. To disambiguate the otolith signal, two main hypotheses have been proposed: frequency segregation and canal-otolith interaction. So far, these models have been based mainly on oculomotor behavior. In this study, we investigated their applicability to human self-motion perception. Six subjects were rotated in yaw about an off-vertical axis (off-vertical axis rotation, OVAR) at various speeds and tilt angles, in darkness. During the rotation, subjects indicated at regular intervals whether a briefly presented dot moved faster or slower than their perceived self-motion. Based on these responses, we determined the time course of the self-motion percept and characterized its steady state with a psychometric function. The psychophysical results were consistent with anecdotal reports. All subjects initially sensed rotation, but then gradually developed a percept of being translated along a cone. The rotation percept could be described by a decaying exponential with a time constant of about 20 s. Translation percept magnitude typically followed a delayed increasing exponential, with delays of up to 50 s and a time constant of about 15 s. The asymptotic magnitude of perceived translation increased with rotation speed and tilt angle, but never exceeded 14 cm/s. These results were most consistent with the predictions of the canal-otolith interaction model, but required parameter values that differed from the original proposal. We conclude that canal-otolith interaction is an important governing principle for self-motion perception that can be deployed flexibly, depending on stimulus conditions.
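The exponential descriptions in this report can be written out directly. The sketch below only restates those functional forms using the approximate values quoted above (rotation time constant about 20 s, translation delay up to 50 s, translation time constant about 15 s, asymptote at most 14 cm/s). The initial rotation magnitude and the particular delay chosen are illustrative assumptions, and the functions are not the fitting procedure used in the study.

```python
import math

def rotation_percept(t, r0, tau=20.0):
    """Perceived rotation speed at time t (s): decaying exponential r0 * exp(-t / tau)."""
    return r0 * math.exp(-t / tau)

def translation_percept(t, asymptote, delay=50.0, tau=15.0):
    """Perceived translation speed: zero until `delay`, then a rising exponential toward `asymptote`."""
    if t < delay:
        return 0.0
    return asymptote * (1.0 - math.exp(-(t - delay) / tau))

# Illustrative values: 60 deg/s initial rotation percept (assumed), 14 cm/s asymptote (upper bound from the text).
for t in (0, 20, 50, 65, 120):
    print(t, round(rotation_percept(t, 60.0), 1), round(translation_percept(t, 14.0), 1))
```

One time constant after its onset, each process has completed about 63% (1 - 1/e) of its change, which is a convenient way to read the time constants off such curves.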

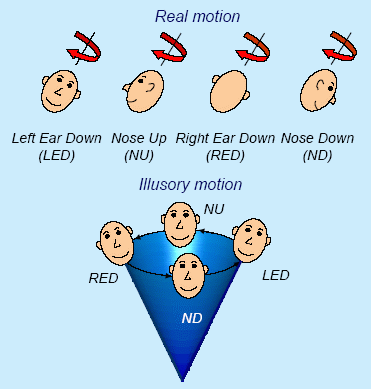

Rotation about an axis tilted relative to gravity generates striking illusory motion percepts. As the initial percept of body rotation gradually subsides, a feeling of being translated along a cone emerges. The direction of the illusory conical motion is opposite to the actual rotation.

Recent publications

- Vingerhoets RAA, Medendorp WP, and Van Gisbergen JAM (2006) Time course and magnitude of illusory translation perception during off-vertical axis rotation. J Neurophysiol 95: 1571-1587.
- Vingerhoets RAA, Van Gisbergen JAM, and Medendorp WP (2007) Verticality perception during off-vertical axis rotation. J Neurophysiol 97: 3256-3268.

3. Central processing of vestibular signals for spatial orientation

Research group: Ronald Kaptein and Jan van Gisbergen

Introduction

The vestibular system is important for detecting the direction of gravity and for analyzing body movements, which generally consist of various combinations of rotations and translations. For this purpose, it has specialized sensors (canals and otoliths), but limitations in their coding properties complicate the analysis. The canal afferents are insensitive to low rotation frequencies. The otoliths, for elementary physical reasons, cannot distinguish between the inertial forces caused by translation and those exerted by tilt relative to gravity. Yet this tilt/translation distinction is vital for survival. How the brain achieves otolith disambiguation, a much-debated topic, is the central theme of this proposal. The project will investigate the consequences of shortcomings in the disambiguation process for spatial orientation and spatial localization.

Report

Using vestibular sensors to maintain visual stability during changes in head tilt, which is crucial when panoramic cues are not available, presents a computational challenge. Reliance on the otoliths requires a neural strategy for resolving their tilt/translation ambiguity, such as canal-otolith interaction or frequency segregation, while the canal signal is subject to bandwidth limitations. In this study, we assessed the relative contributions of canal and otolith signals and investigated how they might be processed and combined. The experimental approach was to compare conditions with and without otolith contributions over a frequency range with various degrees of canal activation.

We tested the perceptual stability of visual line orientation in six human subjects during passive sinusoidal roll tilt in the dark at frequencies from 0.05 to 0.4 Hz (30 deg peak-to-peak). Because subjects continuously monitored the spatial motion of a visual line in the frontal plane, the paradigm required moment-to-moment updating for ongoing ego-motion. Their task was to judge the total spatial sway of the line when it rotated sinusoidally at various amplitudes. From the responses, we determined how the line had to be rotated to be perceived as stable in space. Tests were run both with (subject upright) and without (subject supine) gravity cues.

Analysis of these data showed that the compensation for body rotation in the computation of line orientation in space, while always incomplete, depended on vestibular rotation frequency and on the availability of gravity cues. In the supine condition, the compensation for ego-motion increased steeply with frequency, compatible with an integrated canal signal. The improvement in performance when upright, afforded by graviceptive cues from the otoliths, showed low-pass characteristics. Simulations showed that a linear combination of an integrated canal signal and a gravity-based signal can account for these results.

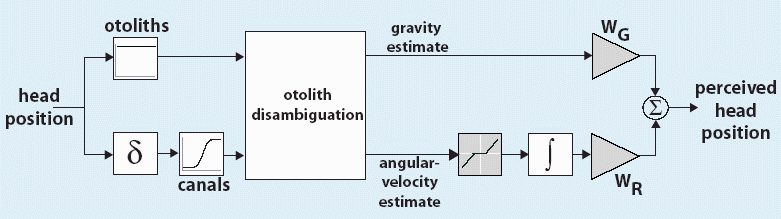

Linear summation of graviceptive and rotational cues. First, separate estimates of the direction of gravity and of angular velocity are obtained. These are weighted and added to obtain the estimate of head rotation. Two disambiguation strategies, frequency segregation and canal-otolith interaction, were simulated.

Model fits. Both versions of the model can mimic the high-pass behavior in both conditions. The filter model, which relies on frequency segregation to disambiguate the otolith signal, reproduces the low-pass characteristics of the otolith signal most accurately. To explain the data, both models require a strong canal contribution.
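The linear summation scheme can be sketched in the frequency domain. This is an illustration under explicit assumptions, not the fitted model. The canal afferent signal is taken as a first-order high-pass filter on angular velocity; integrating it yields the same first-order high-pass filter acting on roll angle, with a hypothetical time constant `tau_c`. The gravity-based signal is taken as a first-order low-pass filter (frequency segregation, as in the filter model) with a hypothetical time constant `tau_g`. The weights `w_c` and `w_g` are made-up values. In the supine condition the gravity term is switched off, because roll about an earth-vertical axis leaves the direction of gravity relative to the head unchanged.

```python
import cmath
import math

def compensation(freq_hz, upright, w_c=0.6, w_g=0.3, tau_c=2.0, tau_g=1.0):
    """Complex compensation gain for sinusoidal roll at freq_hz (all parameters hypothetical).

    Canal path: integrated canal signal, high-pass  j*w*tau_c / (1 + j*w*tau_c).
    Gravity path: low-pass 1 / (1 + j*w*tau_g), available only when upright.
    The estimate of head roll is the weighted sum of the two paths.
    """
    jw = 1j * 2 * math.pi * freq_hz
    canal = jw * tau_c / (1 + jw * tau_c)
    gravity = 1 / (1 + jw * tau_g)
    return w_c * canal + (w_g * gravity if upright else 0)

# Frequencies spanning the tested range (0.05 to 0.4 Hz).
for f in (0.05, 0.1, 0.2, 0.4):
    sup = compensation(f, upright=False)
    upr = compensation(f, upright=True)
    print(f"{f:.2f} Hz  supine gain {abs(sup):.2f}  upright gain {abs(upr):.2f}  "
          f"upright phase {math.degrees(cmath.phase(upr)):.1f} deg")
```

With these made-up values, the supine gain rises with frequency and stays below one (incomplete compensation), while the gravity path adds most at low frequencies, which is the qualitative pattern described above. Swapping the low-pass gravity path for a canal-otolith interaction scheme would be the alternative strategy mentioned in the caption.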

Recent publications

- Kaptein RG, and Van Gisbergen JAM (2004) Interpretation of a discontinuity in the sense of verticality at large body tilt. J Neurophysiol 91: 2205-2214.
- Kaptein RG, and Van Gisbergen JAM (2005) Nature of the transition between two modes of external space perception in tilted subjects. J Neurophysiol 93: 3356-3369.
- Kaptein RG, and Van Gisbergen JAM (2006) Canal and otolith contributions to visual orientation constancy during sinusoidal roll rotation. J Neurophysiol 95: 1936-1948.
- Kaptein RG (2006) Vestibular contributions to visual stability. PhD thesis, Radboud University Nijmegen.

4. Orientation constancy in spatial perception

Research group: Anton van Beuzekom and Jan van Gisbergen

Introduction

One of the key questions in spatial perception is whether the brain has a common representation of gravity that is generally accessible for various perceptual orientation tasks. To evaluate this idea, we compared the ability of passively and actively tilted subjects to indicate earth-centric directions in the dark with a visual and an oculomotor paradigm, and to estimate their body tilt relative to gravity.

Report

We compared the ability of passively tilted subjects to indicate earth-centric directions in the dark with a visual and an oculomotor paradigm, and to estimate their body tilt relative to gravity. Subjective earth-horizontal and earth-vertical data were collected either by adjusting a visual line or by making saccades. In all spatial orientation tests, whether involving space perception or body-tilt judgments, passively tilted subjects made considerable systematic errors, which mostly betrayed tilt underestimation (the Aubert effect) and peaked near 130 deg tilt. However, the Aubert effect was much smaller in body-tilt estimates than in spatial oculomotor pointing, implying that the underlying signal processing must have been different. Furthermore, in actively tilted subjects, the body-tilt estimates became much better, but space-perception performance was virtually identical to that in the passive tilt condition.

These findings are discussed in the context of a conceptual model in an attempt to explain how the different patterns of systematic and random errors in external-space and self-tilt perception may come about.
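The link between line settings and tilt estimates can be made explicit with a simple geometric reading. A minimal sketch, assuming that the setting is measured in head coordinates and that the line rotates with the head: an error-free observer rolled by `body_tilt` would set the line at minus that tilt, so a setting implies a perceived tilt equal to minus the setting. The sign convention, the function names and the sample numbers are hypothetical. The sketch only classifies errors as tilt underestimation (Aubert effect) or overestimation (often called the E-effect or Müller effect); it is not the conceptual model referred to above.

```python
def implied_tilt(line_setting_head_deg):
    """Perceived body tilt implied by a subjective-vertical setting in head coordinates.

    Assumed convention: the line is perceived as earth-vertical when, relative to
    the head, it is rotated by minus the subject's own (perceived) tilt.
    """
    return -line_setting_head_deg

def classify_error(body_tilt_deg, line_setting_head_deg, tol_deg=1.0):
    """Return the line error in world coordinates (deg) and a label for the error type."""
    perceived = implied_tilt(line_setting_head_deg)
    error = body_tilt_deg - perceived  # equals the line's orientation relative to true vertical
    if abs(error) <= tol_deg:
        return error, "veridical"
    # Error with the same sign as the tilt: the tilt was underestimated (Aubert effect).
    if error * body_tilt_deg > 0:
        return error, "Aubert (underestimation)"
    return error, "overestimation (E-effect)"

# Hypothetical settings, not data from the study.
for tilt, setting in [(130.0, -100.0), (60.0, -59.5), (30.0, -35.0)]:
    print(tilt, classify_error(tilt, setting))
```

Under this convention an Aubert error shows up as a subjective vertical displaced toward the side of the body tilt.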

Recent publications

- Van Beuzekom AD, and Van Gisbergen JAM (1999) Comparison of tilt estimates based on line settings, saccadic pointing and verbal reports. Ann N Y Acad Sci 871: 451-454.
- Van Beuzekom AD, and Van Gisbergen JAM (2000) Properties of the internal representation of gravity inferred from spatial direction and body tilt estimates. J Neurophysiol 84: 11-27.
- Van Beuzekom AD, Medendorp WP, and Van Gisbergen JAM (2001) The subjective vertical and the sense of self orientation during active body tilt. Vision Res 41: 3229-3242.

5. Interactions between control signals of visual and vestibular origin in the generation of rapid eye movements

Research group: Anton van Beuzekom and Jan van Gisbergen

Introduction

Rapid eye movements to different sensory inputs share a common final pathway. There is clear evidence that the pathways for saccades to visual, auditory and tactile targets have already converged at the level of the superior colliculus (SC), where movement vectors are coded spatially in a motor map. It is not clear whether this also applies to vestibularly induced rapid eye movements (quick phases). Neural activity related to quick phases has been demonstrated in the SC, but its functional significance remains uncertain. Another possibility is that quick-phase signals enter the saccadic pathway at a more peripheral stage where signals are coded temporally, separately for each component, i.e., at the level of the burst cells. Accordingly, there are at least two levels at which visual and vestibular signals could interact in the generation of rapid eye movements (the vector stage and the component stage). When both visual and vestibular inputs impinge on the system, interesting interactions occur whose precise nature and neural basis have hardly been studied. This project was designed to study these interactions behaviorally and neurophysiologically. Our aim was to elucidate how the SC participates in the control of vestibularly induced rapid eye movements and to clarify to what extent this role and the interaction findings can be reconciled with its role in foveation.

Report

To investigate these issues, we studied in the monkey to what extent saccades induced by electrical microstimulation in the SC (E-saccades) can be modified by concurrent sinusoidal rotation about a vertical axis. We found clear kinematic effects that cannot readily be explained by interactions at the collicular level. Vectorial peak velocity in E-saccades of a given amplitude increased with head velocity for rotations toward the hemifield into which the saccade was directed, and decreased for rotation in the opposite direction. Interestingly, this effect was due to a specific effect on the horizontal component; the vertical component did not show systematic kinematic changes. We suggest that the kinematic effect may be due to preparatory quick-phase signals, impinging at the burst-cell level, that become expressed once the pause-cell gate has been opened by collicular signals. If interaction at the collicular level were responsible for the kinematic effects, one would expect comparable effects in both components. To explore the nature of visuo-vestibular interactions further, we investigated how the human saccadic system copes with the interfering effects of ongoing horizontal nystagmus when attempting to generate oblique prosaccades or antisaccades. The results suggest that voluntary and reflexive rapid eye movements can be programmed in parallel. The outcome of this process shows a degree of independence between horizontal and vertical components that is not readily understandable in terms of interactions at a vectorial coding stage such as the collicular map.
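The argument that the kinematic effect points to a component-level rather than a vector-level interaction can be illustrated numerically. Under the hypothetical assumptions below, which only illustrate the reasoning and are not fitted to the monkey data, a quick-phase drive proportional to head velocity is either added to the horizontal burst-cell drive only (component stage), or it changes the speed of the whole saccade vector (vector stage, as in a collicular map). Only the first leaves the vertical component untouched. The gain `K_QP`, the sign convention and the velocities are made up.

```python
import math

K_QP = 0.5  # hypothetical gain from head velocity (deg/s) to extra eye velocity (deg/s)

def component_stage(vh, vv, head_vel):
    """Quick-phase drive adds to the horizontal component only.

    Assumed sign convention: head_vel > 0 means rotation toward the hemifield
    the saccade is directed into, which speeds up the horizontal component.
    """
    vh_new = vh + K_QP * head_vel * math.copysign(1.0, vh)
    return vh_new, vv

def vector_stage(vh, vv, head_vel):
    """Interaction in a vectorial map: both components are scaled by the same factor."""
    speed = math.hypot(vh, vv)
    scale = (speed + K_QP * head_vel) / speed
    return vh * scale, vv * scale

# Oblique E-saccade peak component velocities (deg/s), hypothetical.
vh, vv = 300.0, 300.0
for head_vel in (-40.0, 0.0, 40.0):
    ch, cv = component_stage(vh, vv, head_vel)
    sh, sv = vector_stage(vh, vv, head_vel)
    print(f"head {head_vel:+.0f} deg/s | component stage: H {ch:.0f}, V {cv:.0f}, "
          f"vector speed {math.hypot(ch, cv):.0f} | vector stage: H {sh:.0f}, V {sv:.0f}")
```

In both variants the vectorial peak velocity rises for rotation toward the saccade's hemifield, so vector speed alone cannot discriminate them; the vertical component is what tells them apart.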

Recent publications

- Van Beuzekom AD, and Van Gisbergen JAM (2002) Collicular microstimulation during passive rotation does not generate fixed gaze shifts. J Neurophysiol 87: 2946-2963.
- Van Beuzekom AD, and Van Gisbergen JAM (2002) Interaction between visual and vestibular signals for the control of rapid eye movements. J Neurophysiol 88: 306-322.

6. Neural mechanisms for the control of binocular eye movements in direction and depth

Research group: Vivek Chaturvedi and Jan van Gisbergen

Introduction

It is generally assumed that, at a peripheral level, two distinct oculomotor control systems are involved in executing binocular gaze shifts in three dimensions. When a target displacement calls only for a change in gaze direction, the movement is controlled by the fast saccadic system. Target changes in depth elicit slow vergence movements. When both subsystems are called into action by a target step in 3D (with a direction and a depth component), they do not act independently: their responses show non-additive dynamic behavior, in that the vergence component is now clearly faster. The aim of this project was to investigate various aspects of the coupling between the two systems, relying on both behavioral analysis and neurophysiological studies.
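How large a vergence change a target step in depth demands follows from standard viewing geometry. For a target straight ahead at distance D, each eye must rotate inward by atan((IPD/2)/D), so the required vergence angle is 2·atan(IPD/(2D)). The interocular distances below are assumed typical values (roughly 6.5 cm in humans, roughly 3 cm in macaque monkeys), not values from these experiments, and the sketch ignores the offset between the eyes' rotation centers and the target's reference point.

```python
import math

def vergence_deg(distance_cm, ipd_cm):
    """Vergence angle (deg) needed to fixate a target straight ahead at distance_cm."""
    return math.degrees(2.0 * math.atan(ipd_cm / (2.0 * distance_cm)))

def vergence_step_deg(d_from_cm, d_to_cm, ipd_cm):
    """Change in vergence for a target step in depth (positive = convergence)."""
    return vergence_deg(d_to_cm, ipd_cm) - vergence_deg(d_from_cm, ipd_cm)

# Assumed interocular distances (cm): typical values, not measured in these studies.
for label, ipd in (("human", 6.5), ("monkey", 3.0)):
    step = vergence_step_deg(100.0, 30.0, ipd)
    print(f"{label}: far 100 cm -> near 30 cm needs {step:.1f} deg of convergence")
```

Because vergence scales roughly with 1/D, the same step in depth demands much more convergence for near targets than for far ones.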

Report

We investigated the role of the monkey superior colliculus (SC) in the control of combined saccade-vergence movements by assessing the perturbing effects of microstimulation. We elicited an electrical saccade by stimulation while the monkey was preparing a visually guided movement to a near visual target. We showed that artificial intervention in the SC, while a 3D refixation is being prepared or is ongoing, can affect the timing (WHEN) and the metric specification (WHERE) of both saccades and vergence. This effect would be expected if the population of movement cells at each SC site is tuned in 3D, combining the well-known topographical code for direction and amplitude with a nontopographical depth representation. For an eye movement to a near target, stimulation at a rostral site led to a significant suppression of the pure vergence response during the period of stimulation. When these paradigms were implemented for 3D refixations, the saccade was inactivated, as expected, while the vergence component was often suppressed more than in the case of pure vergence. We conclude that the rostral SC, presumably indirectly via connections with the pause neurons, can affect vergence control for both pure vergence and combined 3D responses.

Recent publications

- Chaturvedi V, and Van Gisbergen JAM (1998) Shared target selection for combined version-vergence eye movements. J Neurophysiol 80: 849-862.
- Chaturvedi V, and Van Gisbergen JAM (1999) Perturbation of combined saccade-vergence movements by microstimulation in monkey superior colliculus. J Neurophysiol 81: 2279-2296.
- Chaturvedi V, and Van Gisbergen JAM (2000) Stimulation in the rostral pole of monkey superior colliculus: effects on vergence eye movements. Exp Brain Res 132: 72-78.

7. Gaze control by eye-head coordination

Research group: J.A.M. van Gisbergen, C.C.A.M. Gielen

Introduction

Fixating a visual target during head movements requires specific eye movements to compensate for movements of the eyes relative to the target. Several sensory systems (e.g., semicircular canals, otolith system, visual system, muscle spindles in neck muscles) contribute to the accurate stabilization of gaze on targets in 3D space. The aims of this project are 1) to investigate the characteristics of eye movements for gaze stabilization during natural head movements and 2) to determine the contribution of various sensory systems to gaze stabilization.

Report

We investigated gain modulation (context compensation) of the vestibulo-ocular reflex (VOR) for binocular gaze stabilization in human subjects during voluntary yaw and pitch head rotations. Movements of each eye were recorded both while subjects attempted to maintain gaze on a small visual target in a darkened room and after its disappearance (remembered target). Linear regression of the version gain as a function of target distance yielded a slope representing the influence of target proximity and an intercept corresponding to the response at zero vergence (default gain). The slope of the fitted relationship, divided by the geometrically required slope, provided a measure of the quality of version context compensation ('context gain'). In both yaw and pitch experiments, we found default version gains close to one even in the remembered-target condition, indicating that the active VOR for far targets is already close to ideal without visual support. In near-target experiments, the presence of visual feedback yielded near-unity context gains, indicating close-to-optimal performance. For remembered targets, the context gain deteriorated but was still superior to performance in corresponding passive studies reported in the literature.
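The context-gain measure can be sketched as an ordinary least-squares fit. All assumptions here are hypothetical: version gain is regressed on target proximity expressed as vergence angle (so the intercept is the default gain at zero vergence), and the geometrically required slope is derived from the simplified small-angle relation gain ≈ 1 + r/D, where r is the distance from the head's rotation axis to the eyes. With vergence V ≈ IPD/D (radians), this gives a required slope of r/IPD per radian of vergence. The values of r and IPD and the sample data are invented for illustration.

```python
import math

def fit_line(x, y):
    """Ordinary least squares y = intercept + slope * x; returns (slope, intercept)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx

def required_slope_per_deg(r_cm, ipd_cm):
    """Ideal gain change per degree of vergence, from gain ~ 1 + r/D and V ~ IPD/D (small angles)."""
    return (r_cm / ipd_cm) / math.degrees(1.0)  # per radian -> per degree

R_CM, IPD_CM = 10.0, 6.5  # hypothetical eye-to-axis distance and interocular distance

# Invented measurements: vergence (deg) and version gain, constructed with 80% of the ideal slope.
vergence = [2.0, 5.0, 10.0, 15.0]
ideal = required_slope_per_deg(R_CM, IPD_CM)
gain = [0.98 + 0.8 * ideal * v for v in vergence]

slope, default_gain = fit_line(vergence, gain)
context_gain = slope / ideal
print(f"default gain {default_gain:.2f}, context gain {context_gain:.2f}")
```

Separating slope and intercept is what lets the analysis distinguish an overall gain deficit (default gain) from a failure to scale with target proximity (context gain).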

We examined how frequency and target distance, estimated from the vergence angle, affected the sensitivity and phase of the version component of the translational VOR (tVOR), and compared the results to the requirements for ideal performance. Linear regression of the version-sensitivity relationship yielded a slope representing the influence of target distance. The ratio of this slope to the slope required for ideal stabilization provided a measure of the degree of 'distance compensation' in the tVOR. The results show that distance compensation has low-pass characteristics in each target condition: over the frequency range 0.25-1.5 Hz, it declined from 1.00 to 0.84 for visual targets and from 0.87 to 0.57 for remembered targets. The intercept obtained from the regression gave the tVOR response at zero vergence and specified the 'default sensitivity' of the tVOR. Default sensitivity increased with frequency from 0.02 to 0.10 deg/cm for visual targets and from 0.04 to 0.16 deg/cm in darkness. The phase delays of version angular eye velocity relative to translational eye velocity increased on average from 2 deg to 7 deg. Compared with earlier passive studies, the active tVOR in the dark performs much better at all frequencies where comparison was possible. We conclude that an additional nonvestibular signal with low-pass characteristics contributed to the tVOR during self-generated head translations.
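The ideal reference for the tVOR follows from geometry. To keep a target straight ahead at distance D (cm) fixated during a lateral head translation, the eyes must rotate by about 1/D radians per cm, i.e. (180/π)/D deg/cm. Because the vergence angle V is approximately IPD/D radians for targets that are not too close, the ideal sensitivity is approximately V (in deg) divided by IPD, a line through the origin with slope 1/IPD. The sketch below checks this approximation and computes a distance-compensation ratio for a hypothetical fitted slope; the IPD and the fitted slope are assumptions, not data from the study.

```python
import math

def ideal_sensitivity_deg_per_cm(distance_cm):
    """Eye rotation (deg) per cm of lateral head translation needed to keep fixating a target at distance_cm."""
    return math.degrees(1.0 / distance_cm)

def vergence_deg(distance_cm, ipd_cm):
    """Exact vergence angle (deg) for a target straight ahead."""
    return math.degrees(2.0 * math.atan(ipd_cm / (2.0 * distance_cm)))

IPD_CM = 6.5  # assumed interocular distance (cm)

# Small-angle check: ideal sensitivity is close to vergence / IPD at typical viewing distances.
for d in (20.0, 50.0, 200.0):
    print(d, round(ideal_sensitivity_deg_per_cm(d), 3), round(vergence_deg(d, IPD_CM) / IPD_CM, 3))

ideal_slope = 1.0 / IPD_CM   # (deg/cm of sensitivity) per deg of vergence
measured_slope = 0.12        # hypothetical fitted slope, same units
distance_compensation = measured_slope / ideal_slope
print("distance compensation:", round(distance_compensation, 2))
```

A ratio of 1 would mean that eye sensitivity scales with target proximity exactly as geometry demands; values below 1 indicate partial distance compensation.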

Recent publications

- Medendorp WP, Crawford JD, Henriques DYP, Van Gisbergen JAM, and Gielen CCAM (2000) Kinematic strategies for upper arm-forearm coordination in three dimensions. J Neurophysiol 84: 2302-2316.
- Medendorp WP, Van Gisbergen JAM, Van Pelt S, and Gielen CCAM (2000) Context compensation in the vestibulo-ocular reflex during active head rotations. J Neurophysiol 84: 2904-2917.
- Medendorp WP, Van Gisbergen JAM, and Gielen CCAM (2002) Human gaze stabilization during active head translations. J Neurophysiol 87: 295-304.