
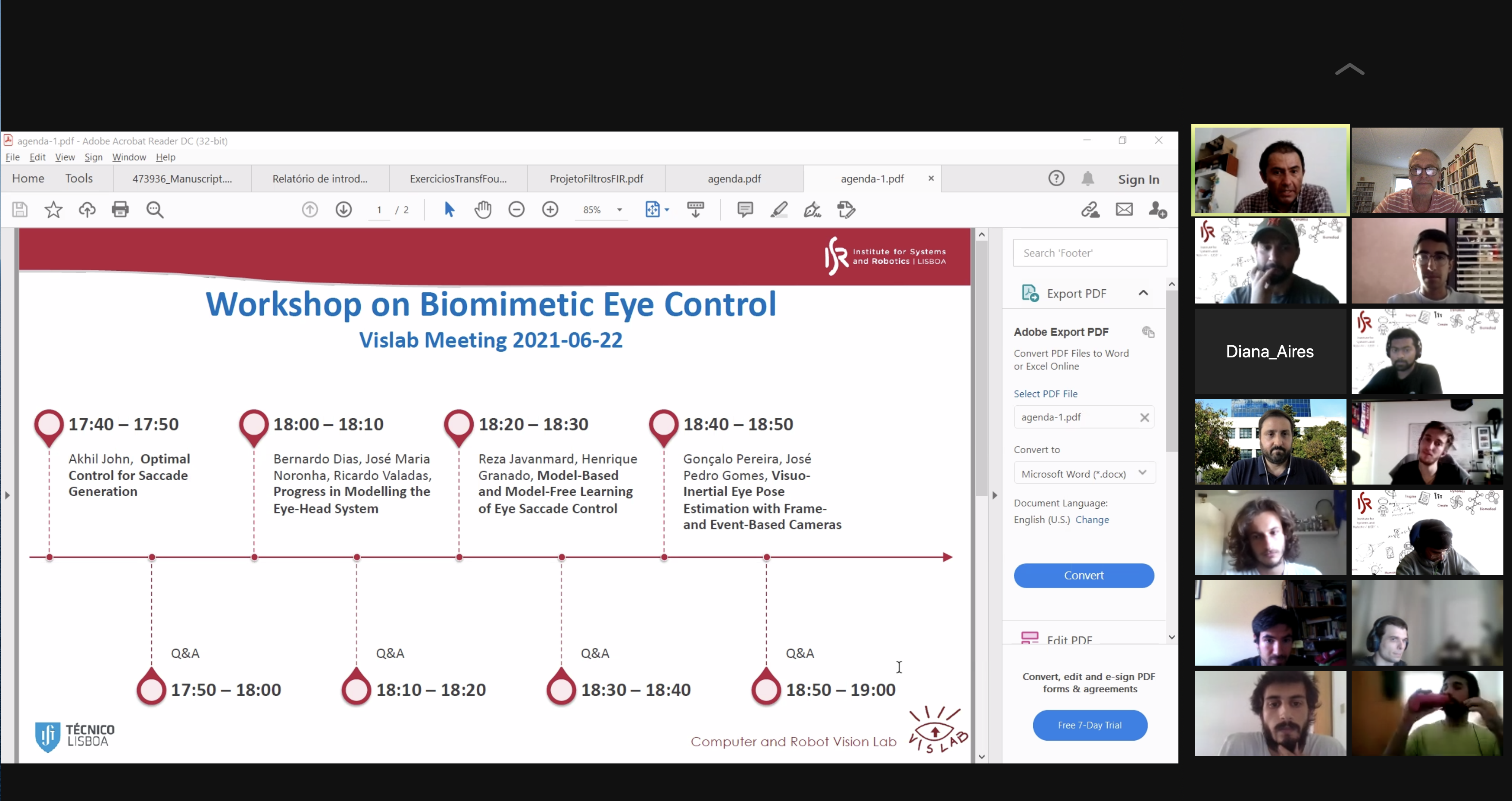

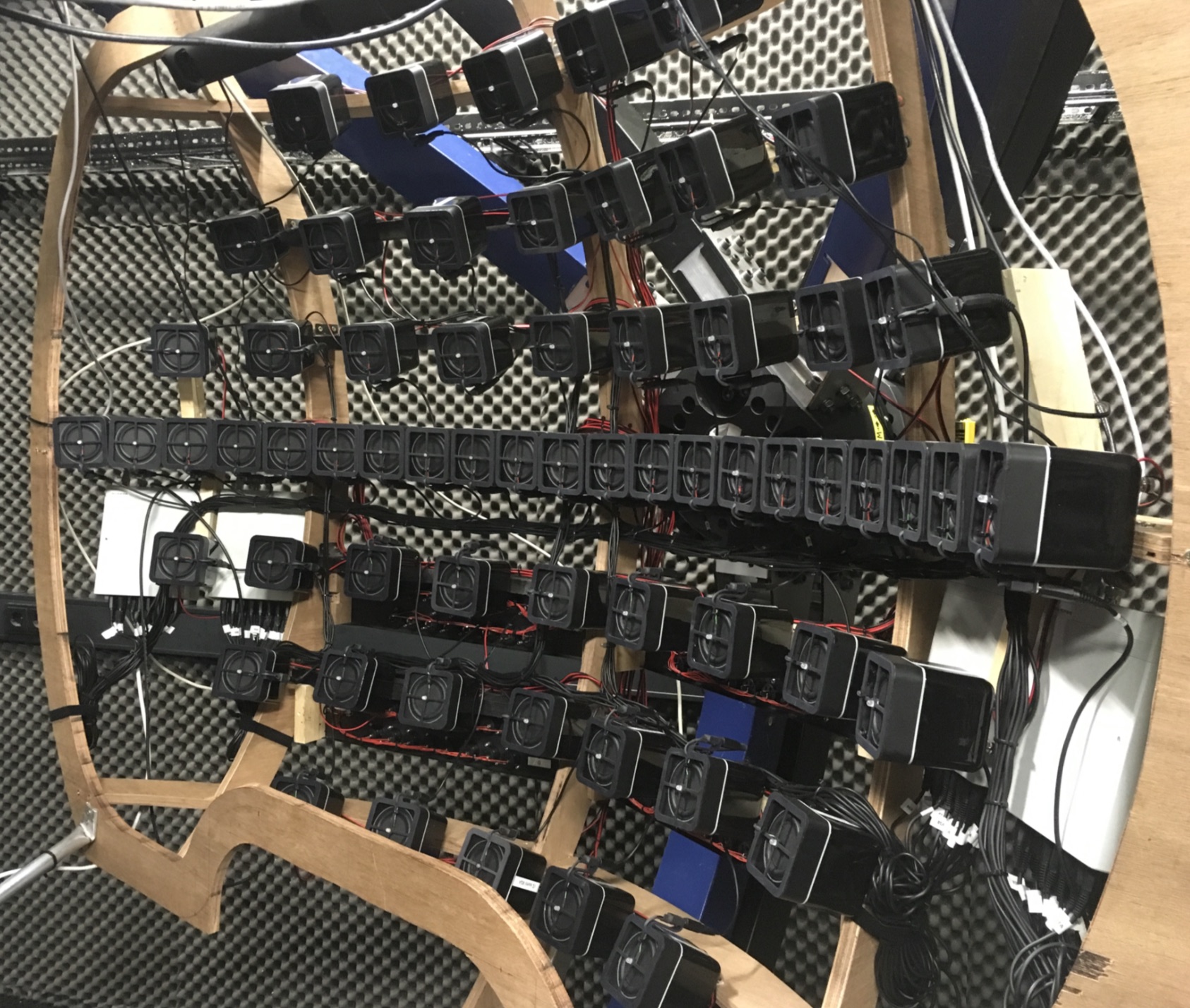

for our moving sound experiments (dated June 2021).

Our experimental facilities and support staff


Bachelor students

Master students


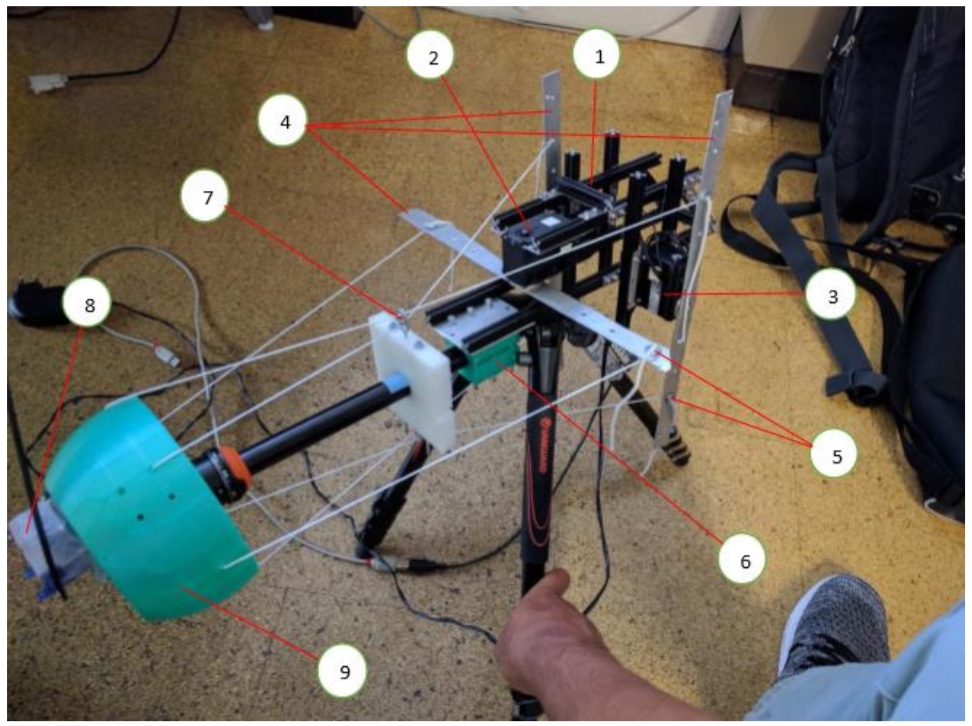

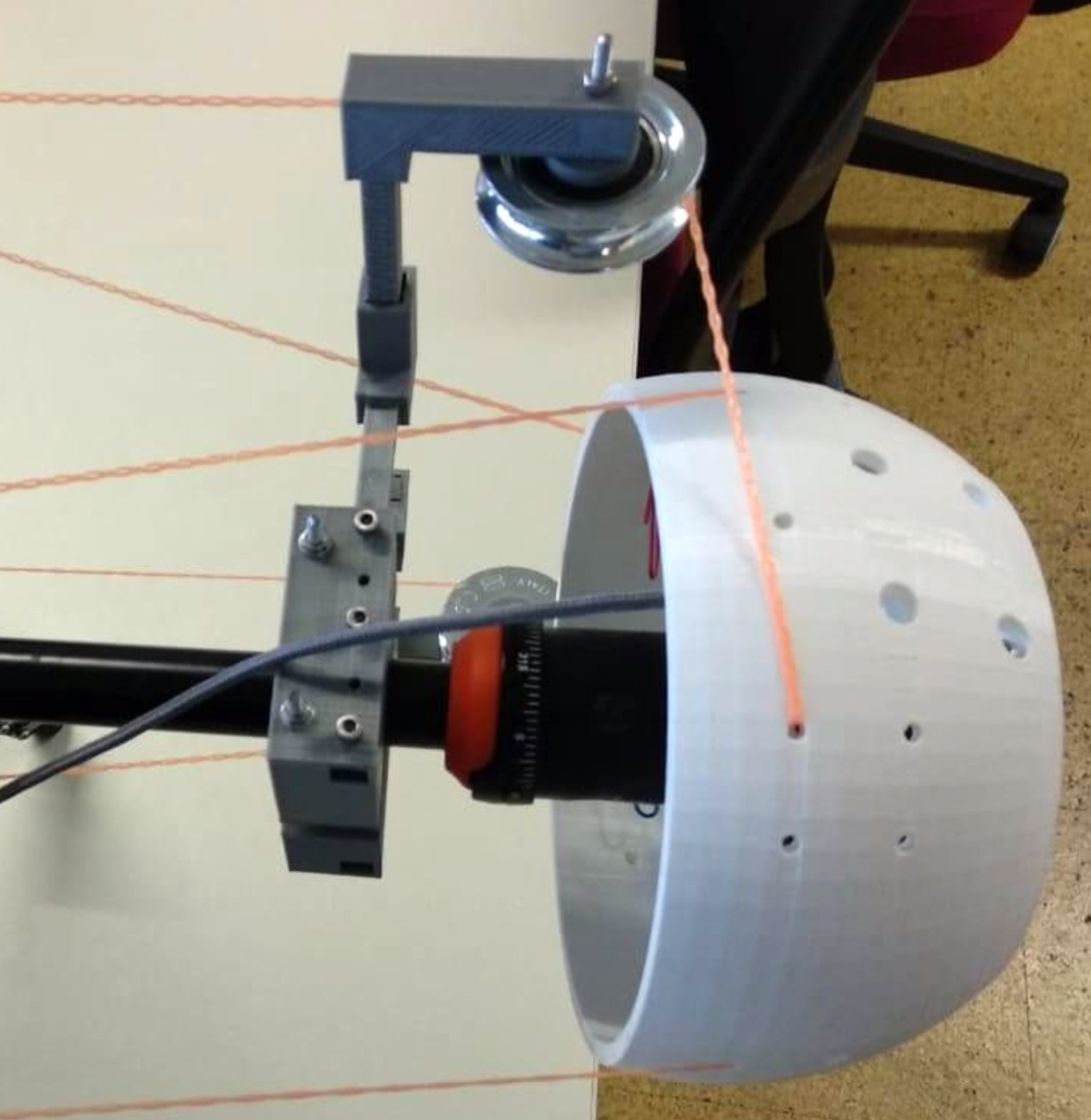

and his new eye design.

Eye with torsional pulley for the superior oblique, and Rui Cardoso, master student, eye control.

Bernardo das Chagas, master student, eye-head control, and Gonçalo Pereira, master student, computer vision and control (sensor fusion).


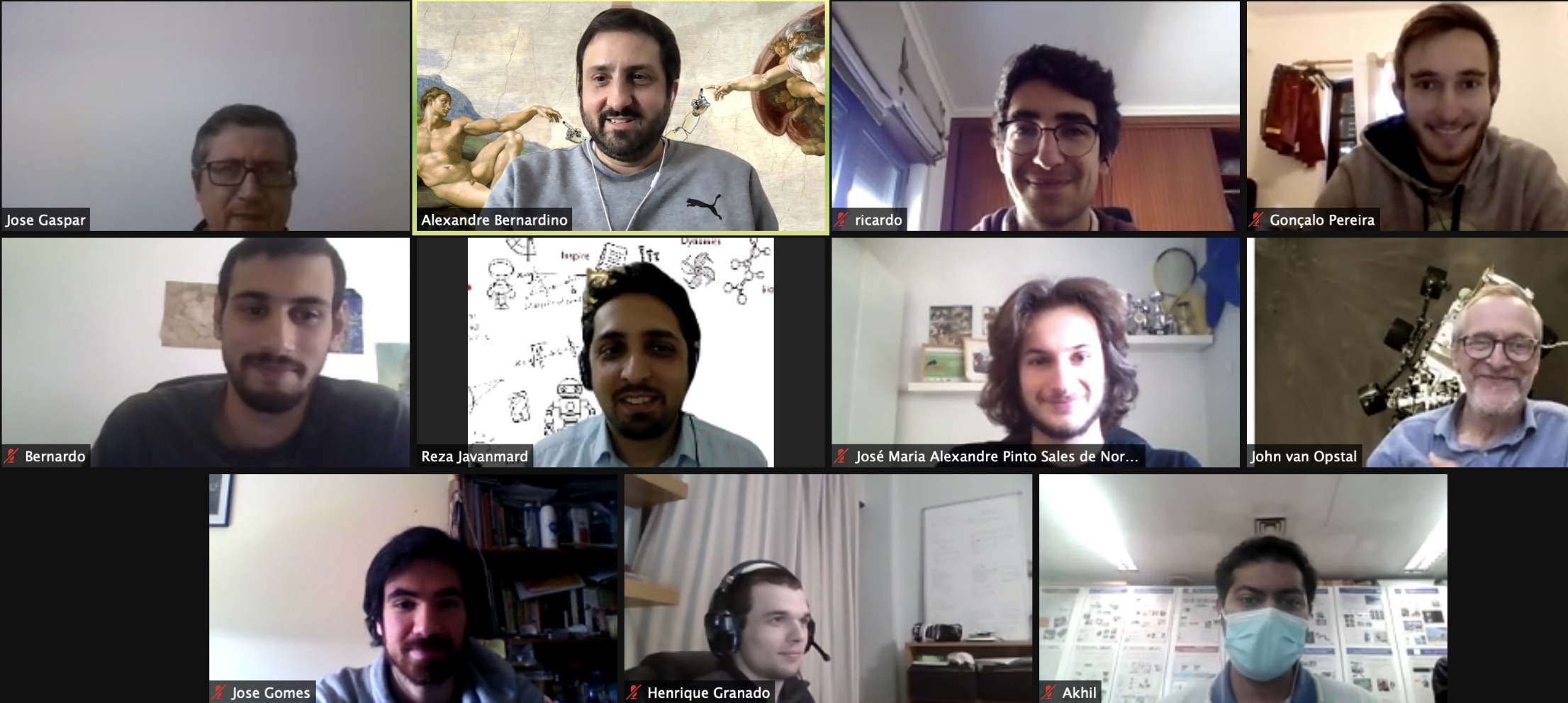

and Henrique Granado, student, works on model-free learning.

Jose Noronha and Ricardo Valadas, master students, modelling eye-muscle sideslip.

John and Alex, proud supervisors of the EyeTeam.

Jose Gomes, master student, works with the event camera, and Jose Gaspar, PI, visual navigation and calibration.

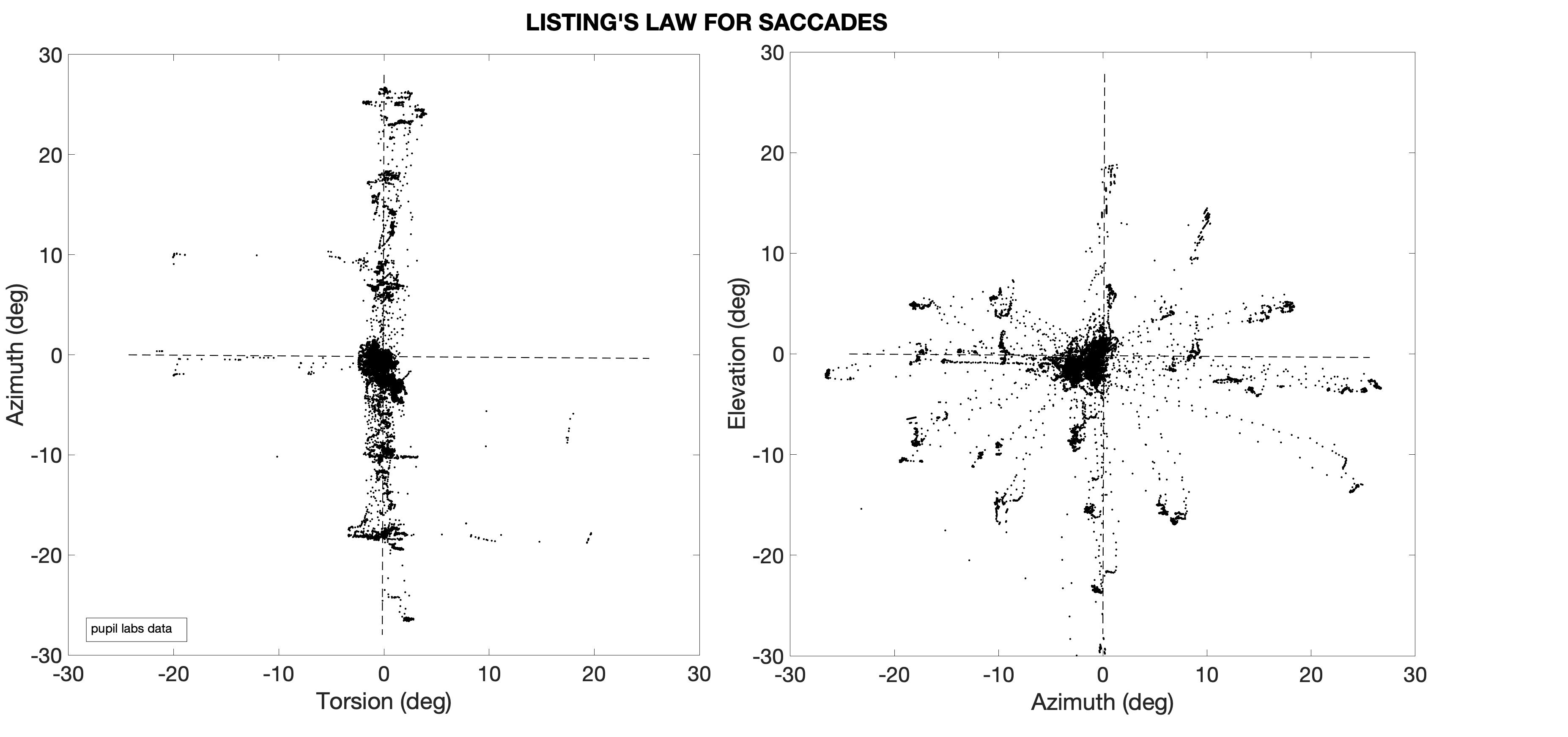

Listing's law, measured for head-restrained monkey saccades in 3D. In quaternion laboratory coordinates (r'x, r'y, r'z), Listing's plane (r'x = a·r'y + b·r'z) has a width of only 0.6 deg.

The primary position (PP: (1, a, b)) points upward (as the center of the oculomotor range, OMR, is about 15 deg downward); i.e., the plane is tilted relative to gravity.
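The plane parameters a and b, and the plane's width, are typically obtained by a least-squares fit to measured eye orientations. The following is a minimal sketch under stated assumptions, not necessarily the procedure used by Hess et al.: orientations arrive as unit quaternions (q0, q1, q2, q3) with q1 the torsional component; they are converted to rotation vectors r = (q1, q2, q3)/q0, whose magnitude is tan(ρ/2); the plane is fitted through the origin in the form r'x = a·r'y + b·r'z; and the width is reported as the RMS torsional residual, converted to an approximate angle with 2·atan. The function names are hypothetical.

```python
import math


def quat_to_rotvec(q):
    """Unit quaternion (q0, q1, q2, q3) -> rotation vector (q1, q2, q3) / q0.

    Its magnitude is tan(rho / 2), rho being the rotation angle."""
    q0, q1, q2, q3 = q
    return (q1 / q0, q2 / q0, q3 / q0)


def fit_listing_plane(rotvecs):
    """Least-squares fit of r_x = a * r_y + b * r_z (plane through the origin).

    `rotvecs` is a list of (r_x, r_y, r_z) tuples, r_x being torsion.
    Returns (a, b, width_deg), where width_deg is the RMS torsional residual
    converted to an approximate angle with 2 * atan(.)."""
    syy = syz = szz = sxy = sxz = 0.0
    for rx, ry, rz in rotvecs:
        syy += ry * ry
        syz += ry * rz
        szz += rz * rz
        sxy += rx * ry
        sxz += rx * rz
    # Normal equations: [[syy, syz], [syz, szz]] @ [a, b] = [sxy, sxz]
    det = syy * szz - syz * syz
    if abs(det) < 1e-15:
        raise ValueError("r_y and r_z components are degenerate; no unique plane")
    a = (sxy * szz - syz * sxz) / det
    b = (syy * sxz - syz * sxy) / det
    # Torsional residuals, measured along r_x from the fitted plane
    ss = sum((rx - a * ry - b * rz) ** 2 for rx, ry, rz in rotvecs)
    rms = math.sqrt(ss / len(rotvecs))
    return a, b, math.degrees(2.0 * math.atan(rms))


# Usage with synthetic, noise-free data lying on a plane with a = 0.1, b = -0.25
samples = [(0.1 * ry - 0.25 * rz, ry, rz)
           for ry in (-0.2, -0.1, 0.0, 0.1, 0.2)
           for rz in (-0.2, 0.0, 0.2)]
a, b, width = fit_listing_plane(samples)
print(a, b, width)
```

With real recordings the residuals are nonzero, and width_deg plays the role of the plane's width quoted above; fitting through the origin follows the plane equation as written, without an offset term.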

Figure taken from Hess et al., Vision Res., 1992. There is controversy about the origin of this two-degrees-of-freedom (2-dof) behaviour: mechanical constraints (e.g., muscle pulleys) versus neural (control) strategies. The following arguments support a control strategy:


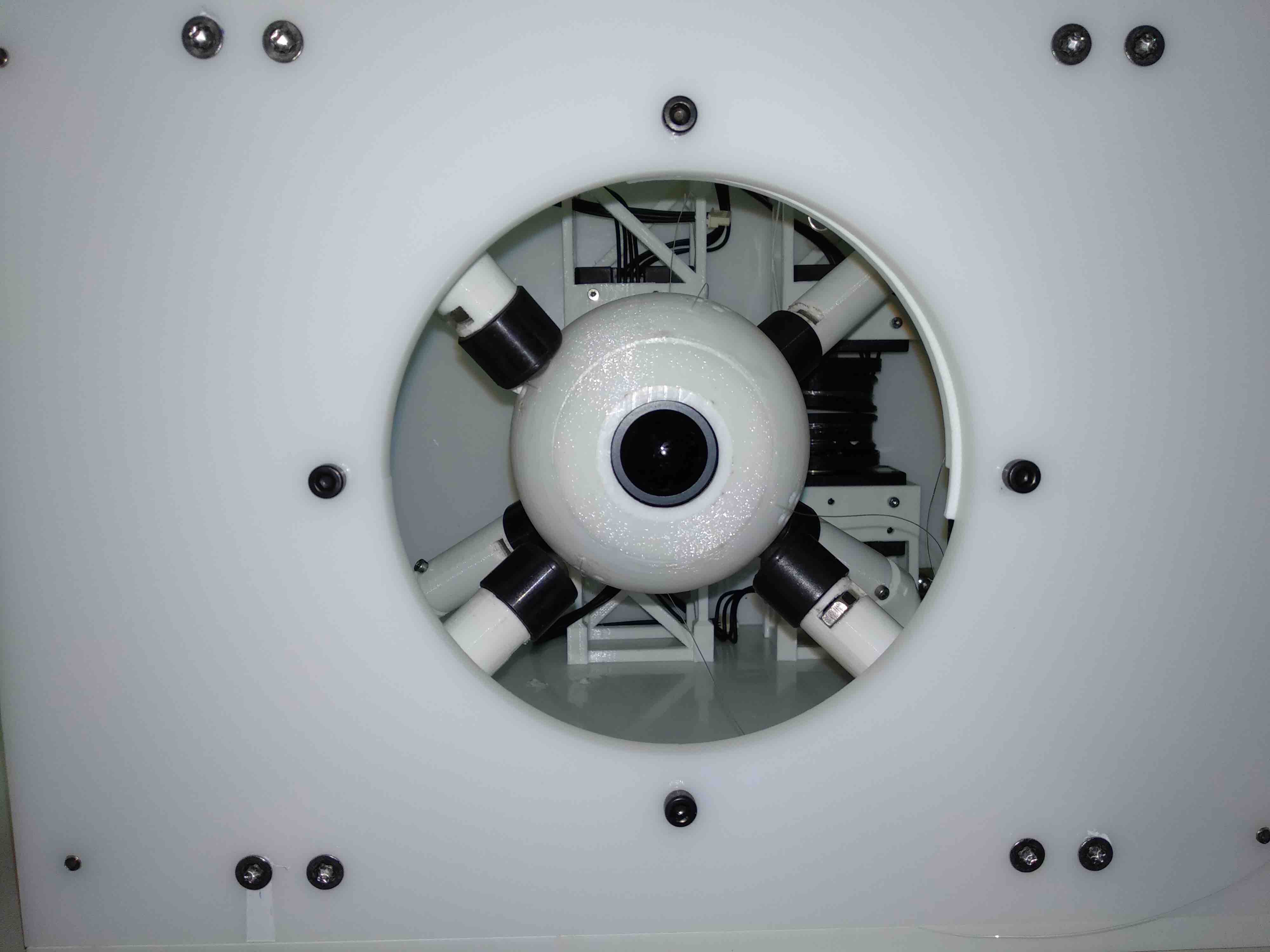

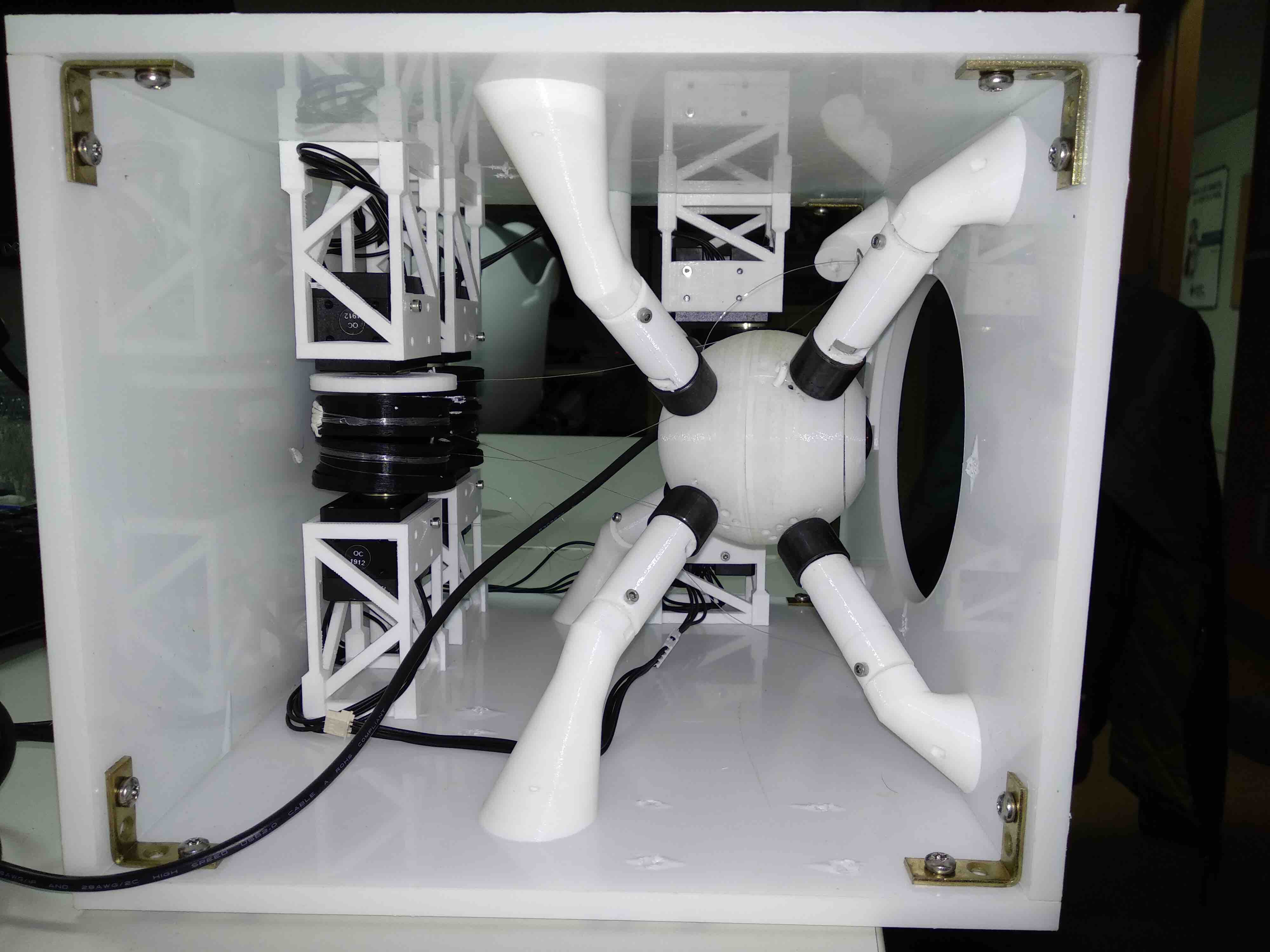

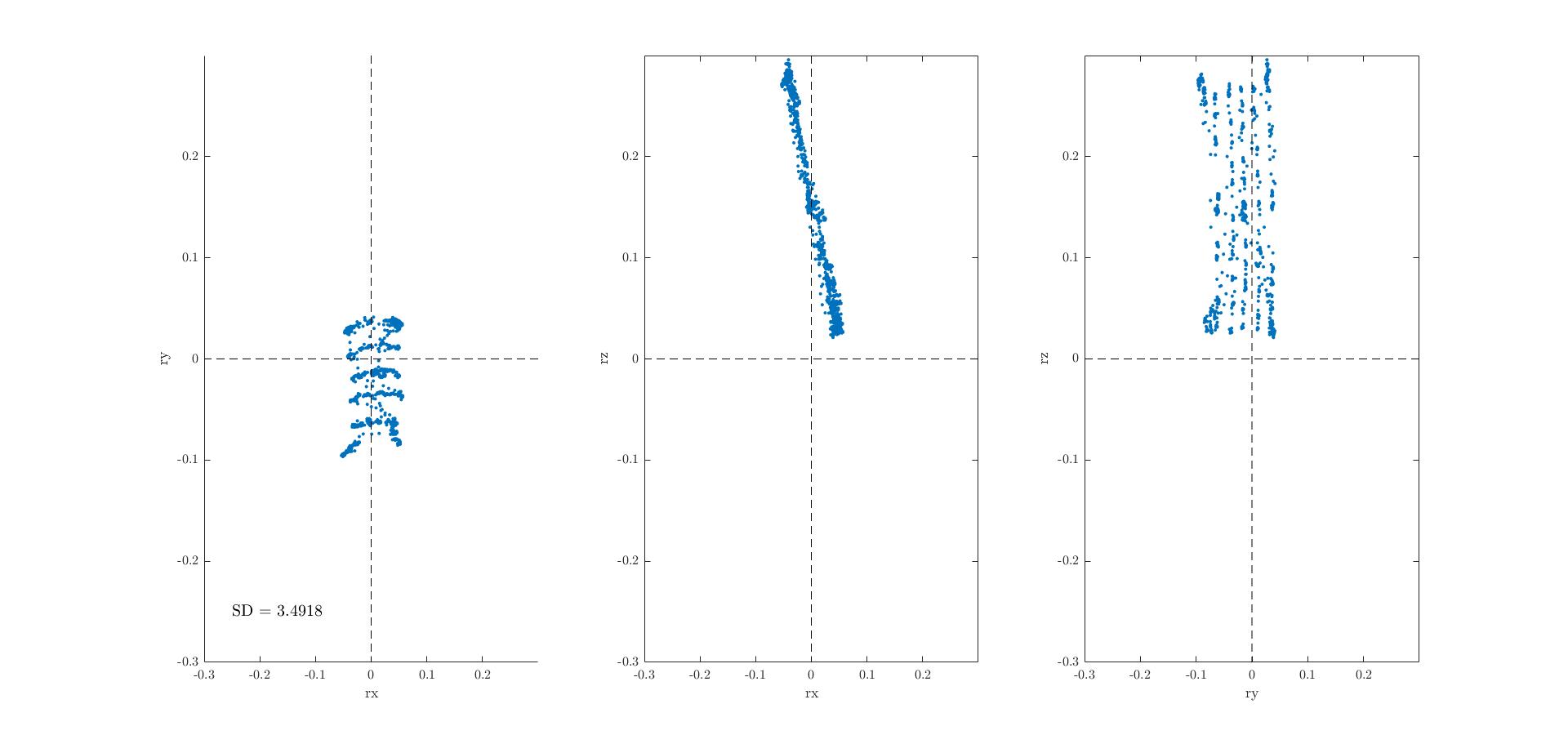

Computer simulations of random 3D saccades with a nonlinear mechanical model of our 3D robotic eye, with three controllers and six elastic 'muscles' at realistic insertion points on the globe (by Carlos). This model can generate 3D eye orientations over a large range (e.g., torsion up to 15 deg). Optimal control on a linearised version of this model is assumed to minimize a weighted sum of different costs. Here, we show two different strategies to account for Listing's law. The top figure includes three costs: saccade duration (p; time discount), total energy consumption during the trajectory (proportional to the squared control velocity), and saccade accuracy; the latter requires the eye to be on the target T = (ry, rz) and in LP (i.e., rx = 0) at the end of the movement (at time p). PP is assumed to be straight ahead. The bottom figure includes four costs: duration (p), energy, saccade accuracy (now only for the 2D target location, with torsion left free), and total muscle force at each fixation (at p). Both models yield 3D single-axis rotations that generate saccade trajectories keeping the eye quite close to LP! The latter even yields a downward tilted plane (dotted yellow line; the central OMR is about 15 deg upward from PP), due to the force of gravity acting on our (macro-eye) system.
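Why a single-axis rotation is compatible with staying in LP can be checked with pure kinematics (this is not the optimal-control model itself). A minimal sketch, assuming orientations are unit quaternions (w, x, y, z) expressed relative to PP, with x the torsional component, so that LP corresponds to rx = 0 as in the top-figure model: interpolating along the shortest great circle between two zero-torsion orientations (slerp) is the same as rotating about the single space-fixed axis of q_end·q_start⁻¹. All helper names are hypothetical.

```python
import math


def qmul(a, b):
    """Hamilton product a * b of quaternions given as (w, x, y, z)."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 - a1 * b3 + a2 * b0 + a3 * b1,
            a0 * b3 + a1 * b2 - a2 * b1 + a3 * b0)


def listing_quat(ry, rz):
    """Unit quaternion for the zero-torsion rotation vector (0, ry, rz)."""
    n = math.sqrt(1.0 + ry * ry + rz * rz)
    return (1.0 / n, 0.0, ry / n, rz / n)


def slerp(q1, q2, t):
    """Great-circle interpolation, equal to (q2 * q1^-1)^t * q1 for unit quaternions."""
    dot = sum(x * y for x, y in zip(q1, q2))
    if dot < 0.0:  # take the shorter of the two great-circle arcs
        q2, dot = tuple(-x for x in q2), -dot
    omega = math.acos(min(1.0, dot))
    if omega < 1e-12:
        return q1
    w1 = math.sin((1.0 - t) * omega) / math.sin(omega)
    w2 = math.sin(t * omega) / math.sin(omega)
    return tuple(w1 * x + w2 * y for x, y in zip(q1, q2))


def max_torsion_deg(q1, q2, steps=100):
    """Largest |r_x| along the path, as an approximate angle 2 * atan(r_x)."""
    worst = 0.0
    for k in range(steps + 1):
        q = slerp(q1, q2, k / steps)
        worst = max(worst, abs(math.degrees(2.0 * math.atan(q[1] / q[0]))))
    return worst


def rotation_axis(q1, q2):
    """Unit axis (x, y, z) of the single rotation q2 * conj(q1) taking q1 to q2."""
    d = qmul(q2, (q1[0], -q1[1], -q1[2], -q1[3]))
    norm = math.sqrt(d[1] ** 2 + d[2] ** 2 + d[3] ** 2)
    if norm == 0.0:
        raise ValueError("identical orientations have no rotation axis")
    return tuple(x / norm for x in d[1:])


# Usage: from a 10 deg rotation about y to a 10 deg rotation about z
half = math.tan(math.radians(10.0) / 2.0)  # rotation-vector magnitude tan(rho/2)
q_start, q_end = listing_quat(half, 0.0), listing_quat(0.0, half)
print(max_torsion_deg(q_start, q_end), rotation_axis(q_start, q_end))
```

The path stays at zero torsion because every slerp sample is a linear combination of two quaternions whose torsional component is zero, while the axis of the single rotation has a nonzero torsional component. This is the familiar observation that LP-obeying saccades between non-primary positions need axes tilted out of the plane.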

Interestingly, the saccades followed nearly straight trajectories (i.e., the 3D motor commands were synchronized (top)), and yielded velocity profiles that obey the well-known nonlinear saccade main sequence (saccade duration increases with amplitude, peak eye velocity saturates with amplitude, and the acceleration phase has a roughly fixed duration), although the model is (at present) free of (multiplicative) noise.
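The trade-off between duration and energy that shapes such velocity profiles can be illustrated in one dimension. This is a minimal sketch and not the EyeTeam's 3D six-muscle model: it assumes a hypothetical unit-mass double integrator (x'' = u) that moves from rest to rest over amplitude A in time p, treats accuracy as a hard end-point constraint, uses the energy ∫u² dt (closed form 12A²/p³ for this plant), and replaces the time discount with an assumed linear cost γ·p with a hypothetical weight γ. Setting dJ/dp = −36A²/p⁴ + γ = 0 gives p* = (36A²/γ)^(1/4), so duration grows with amplitude and peak velocity 1.5A/p* grows less than proportionally.

```python
import math


def min_energy(amplitude, duration):
    """Minimum integral of u(t)^2 that moves a unit-mass double integrator
    (x'' = u) from rest at 0 to rest at `amplitude` in `duration`: 12 A^2 / p^3."""
    return 12.0 * amplitude ** 2 / duration ** 3


def total_cost(amplitude, duration, gamma):
    """Energy plus a hypothetical linear time cost gamma * p.

    Accuracy is a hard end-point constraint here, so it adds no term."""
    return min_energy(amplitude, duration) + gamma * duration


def optimal_duration(amplitude, gamma, lo=1e-3, hi=1.0, tol=1e-10):
    """Golden-section search for the p minimising total_cost on [lo, hi].

    total_cost is convex for p > 0, hence unimodal, so the search is valid."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    while b - a > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if total_cost(amplitude, c, gamma) < total_cost(amplitude, d, gamma):
            b = d  # minimum lies in [a, d]
        else:
            a = c  # minimum lies in [c, b]
    return 0.5 * (a + b)


def peak_velocity(amplitude, duration):
    """Peak of the minimum-energy profile v(t) = 6 A t (p - t) / p^3, at t = p / 2."""
    return 1.5 * amplitude / duration


GAMMA = 1e9  # hypothetical time-cost weight; amplitudes in deg, durations in s
for amp in (5.0, 10.0, 20.0, 40.0):
    p = optimal_duration(amp, GAMMA)
    print(f"A = {amp:4.0f} deg  p* = {1000 * p:5.1f} ms  "
          f"peak v = {peak_velocity(amp, p):6.0f} deg/s")
```

With a linear time cost, duration scales as √A and peak velocity keeps growing as √A, so this sketch does not reproduce true saturation; the caption's time-discount cost may take a different form.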

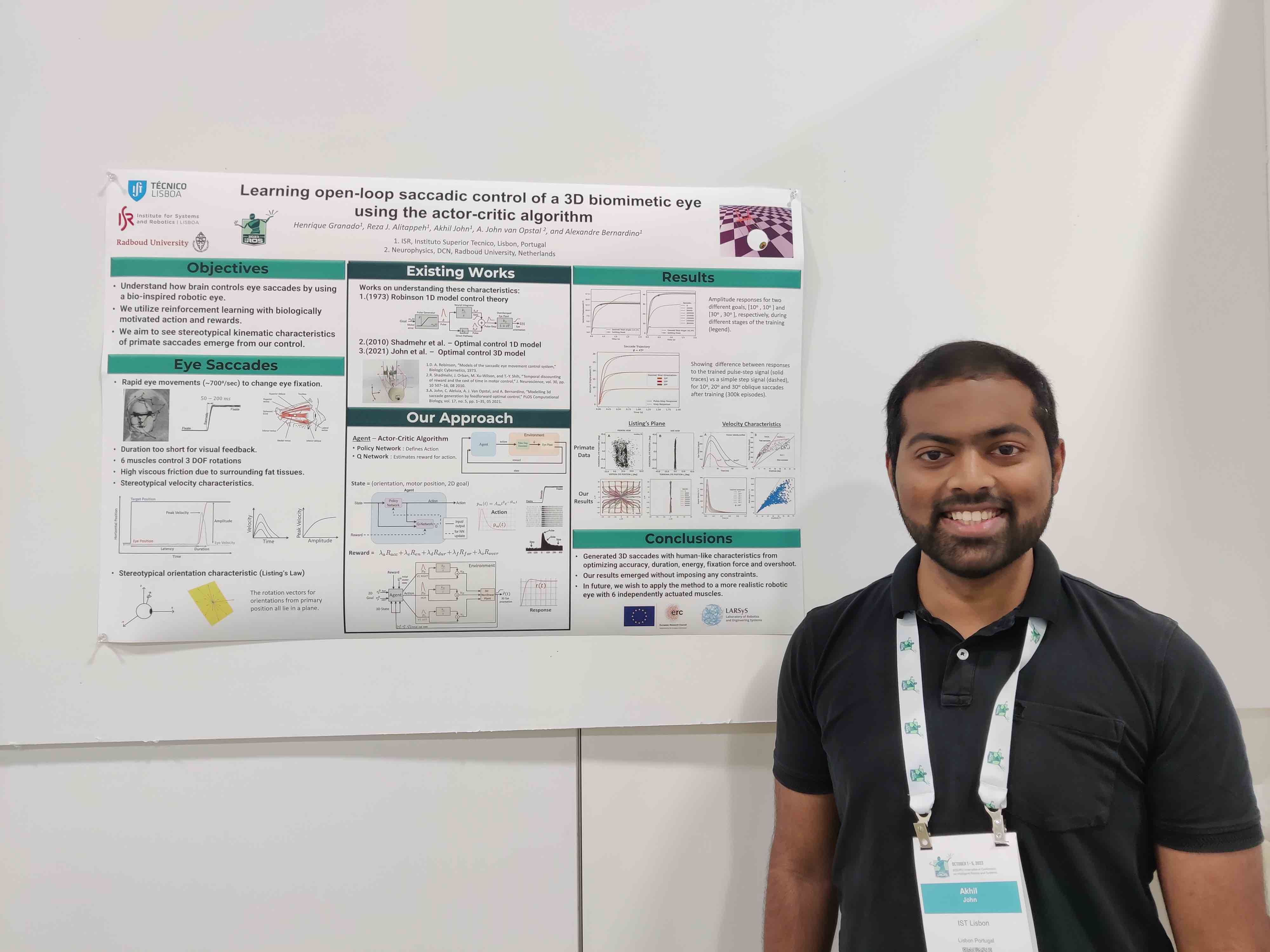

See this website for Henrique's poster video talk, and this website for Bernardo's poster video talk.


His video presentation can be viewed here.


elevation). Each speaker also has a central LED for visual stimulation.

while under passive two-axis sinusoidal vestibular rotation.